This is the first blog post in a series about implementing a simple feedforward neural net.

The basis of a neural net is the neuron:

Inputs $x_1$, $x_2$, and $x_3$ are fed into the neuron. The neuron has weights corresponding to each of these inputs: $w_1$, $w_2$, and $w_3$. The neuron also has a bias value $b$. The weights and biases are called the parameters of the neural net. Each input is multiplied by its corresponding weight. Those products are all summed up into one value, and then the bias is added to produce the weighted sum, $z$:

$$z = w_1 x_1 + w_2 x_2 + w_3 x_3 + b$$
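To make the arithmetic concrete, here is a minimal Python sketch of a single neuron's weighted sum. The function name `weighted_sum` and the input, weight, and bias values are illustrative assumptions, not part of any library.

```python
def weighted_sum(inputs, weights, bias):
    """Multiply each input by its weight, sum the products, then add the bias."""
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    return sum(x * w for x, w in zip(inputs, weights)) + bias

# Hypothetical example: three inputs, three weights, one bias.
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -1.0, 0.25]
bias = 0.5
z = weighted_sum(inputs, weights, bias)
print(z)  # 0.5 - 2.0 + 0.75 + 0.5 = -0.25
```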

The weighted sum is then passed into a function called an “activation function”. There are different kinds of activation functions, and many of them “squash” the weighted sum into a fixed range. For example, the hyperbolic tangent (tanh) yields a value between -1.0 and 1.0, while the logistic sigmoid yields a value between 0.0 and 1.0. The result of the activation function is the neuron's output.
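The following sketch shows two common squashing activation functions, written with Python's standard `math` module. Which activation this series will actually use is not stated here, so treat these as examples rather than the author's choice. The sigmoid is written in a numerically stable form so that large negative inputs do not overflow `math.exp`.

```python
import math

def sigmoid(z):
    """Logistic sigmoid: mathematically maps any real z into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, rewrite to avoid computing exp of a large positive number.
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z):
    """Hyperbolic tangent: mathematically maps any real z into (-1, 1)."""
    return math.tanh(z)

for z in [-10.0, -0.25, 0.0, 0.25, 10.0]:
    print(f"z={z:6.2f}  sigmoid={sigmoid(z):.4f}  tanh={tanh(z):.4f}")
```

In floating point, very large inputs round to the endpoints (for example, `tanh(30.0)` evaluates to `1.0`), but the output never leaves the range.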

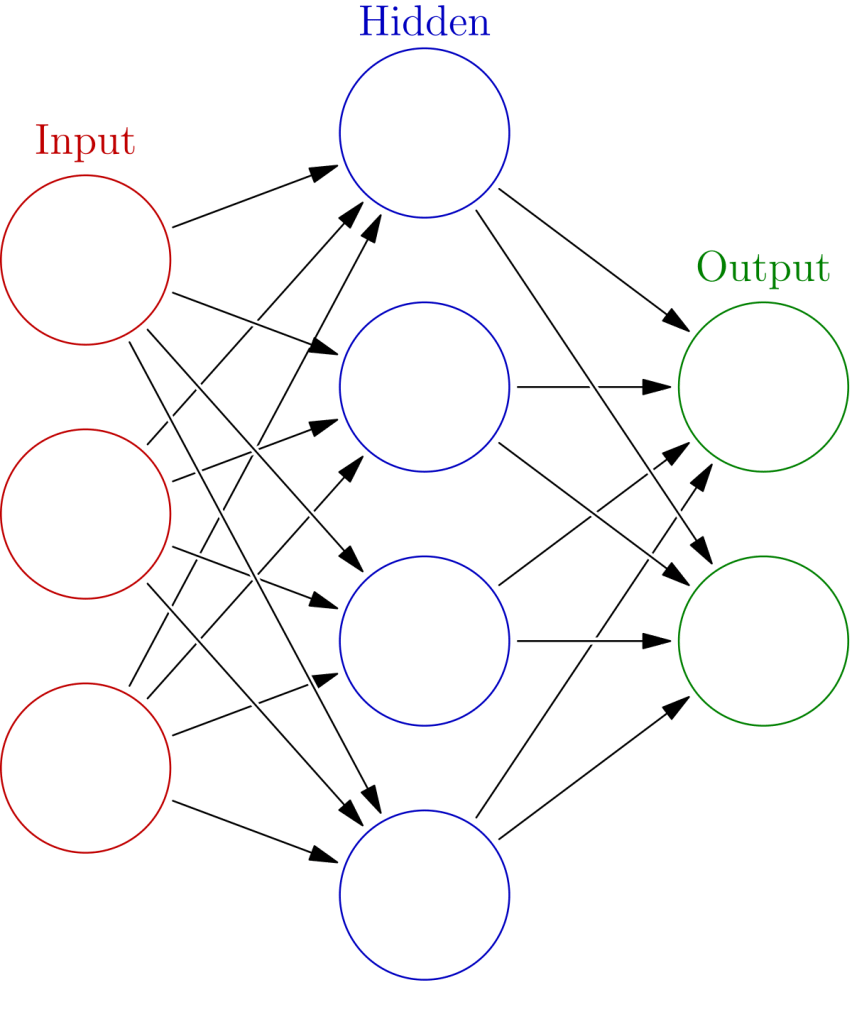

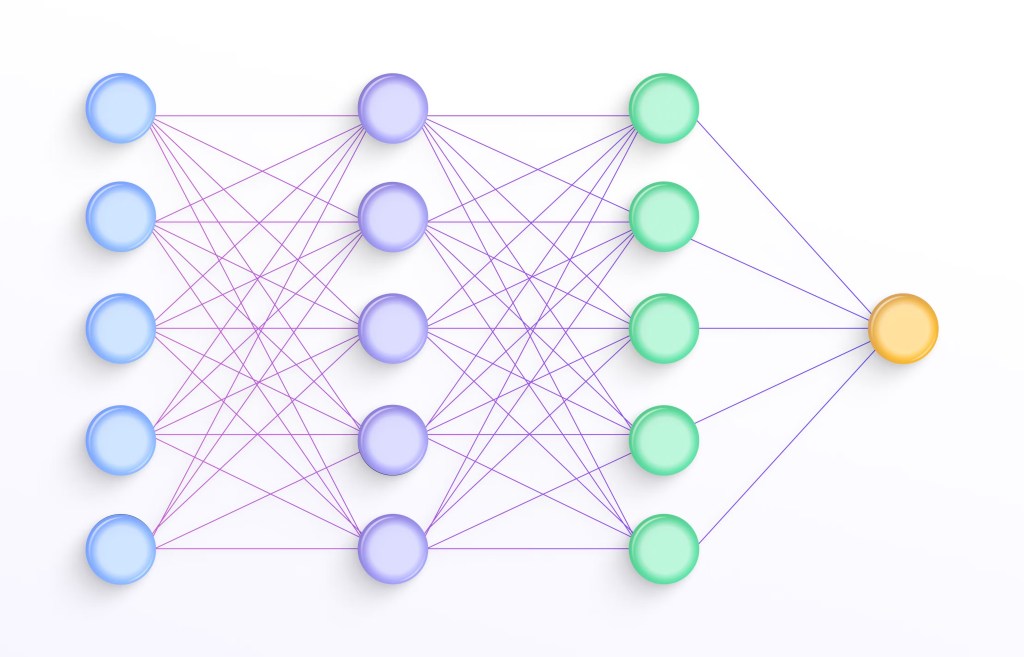

A neural net is made up of layers of these neurons. A typical neural net has at least three layers: an input layer, a hidden layer, and an output layer. Every net has an input layer and an output layer; the number of hidden layers varies, and neural nets with many hidden layers are called “deep neural nets”. Note that the input layer does not actually contain neurons: it is simply the input you pass into the net. It is often drawn the same way as the other layers, but it is important to keep this distinction in mind.

Here we see the layers of the neural net. Each circle represents a neuron. Each line corresponds to the weight of the connection between two neurons.
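One way to see the “lines are weights” idea in code is to store each layer as a list of weight rows (one row per neuron, one weight per incoming connection) plus one bias per neuron. The sketch below assumes a hypothetical 3-input, 4-hidden, 2-output net and that layout; the names `init_layer`, `init_net`, and `count_parameters` are illustrative, not from the original post. Because the input layer has no neurons, it contributes no parameters.

```python
import random

def init_layer(n_inputs, n_neurons, rng):
    """One layer: each neuron gets one weight per incoming connection and one bias."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [0.0 for _ in range(n_neurons)]  # starting biases at zero is a design choice
    return weights, biases

def init_net(layer_sizes, seed=0):
    """layer_sizes[0] is the input size; the input layer creates no neurons of its own."""
    rng = random.Random(seed)
    return [init_layer(n_in, n_out, rng)
            for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

def count_parameters(net):
    """Return (number of weights, number of biases) across all layers."""
    n_weights = sum(len(row) for weights, _ in net for row in weights)
    n_biases = sum(len(biases) for _, biases in net)
    return n_weights, n_biases

net = init_net([3, 4, 2])        # hypothetical sizes: 3 inputs, 4 hidden, 2 outputs
print(len(net))                  # 2 layers with parameters: hidden and output
print(count_parameters(net))     # (20, 6): 3*4 + 4*2 weights, 4 + 2 biases
```

Each of the 20 weights corresponds to one line in a diagram of this net.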

In this neural net there are no connections going backward. Inputs connect only forward to the hidden layer, and hidden-layer neurons connect only forward to the output layer. This is what characterizes a “feed forward” neural net.
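As a rough preview of what “feed forward” means for computation, the sketch below passes values through the layers strictly in order, so no layer ever sends values back to an earlier one. It assumes a tanh activation and a hypothetical layout where each layer is a `(weights, biases)` pair; the function names and hand-picked parameters are illustrative, and the implementation in the upcoming posts may differ.

```python
import math

def layer_forward(inputs, weights, biases):
    """Each neuron: weighted sum of the previous layer's outputs plus bias, then tanh."""
    return [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def feed_forward(inputs, net):
    """Pass values through the layers in order; outputs only ever flow forward."""
    activations = inputs
    for weights, biases in net:
        activations = layer_forward(activations, weights, biases)
    return activations

# Hypothetical 2-input, 2-hidden, 1-output net with hand-picked parameters.
net = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, 0.0]),  # hidden layer: 2 neurons, 2 weights each
    ([[1.0, 1.0]], [0.0]),                     # output layer: 1 neuron, 2 weights
]
print(feed_forward([1.0, 1.0], net))
```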

In the next post, we will begin by implementing the forward pass through the neural net. Our neural net will take some input and feed these values through the network to produce an output.
