ai

-

Introduction

This is the first installment in a series of blog posts about Support Vector Machines. In these posts, I will give an overview of both the mathematics behind Support Vector Machines and their implementation, assuming only some mathematical background and almost no familiarity with machine learning libraries. To best understand these blog posts…

-

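Diagonal Matrices

A diagonal matrix is a square matrix whose only non-zero entries lie along the main diagonal, with zeros everywhere else:

$$D = \begin{pmatrix} d_1 & 0 & \cdots & 0 \\ 0 & d_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & d_n \end{pmatrix}$$

As a linear transformation, a diagonal matrix is very easy to interpret. Vectors are stretched or scaled along the directions of the basis vectors: the $i$-th coordinate of a vector is multiplied by the diagonal entry $d_i$ of $D$. Here is a visual example of what happens when you…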
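To make the scaling concrete, here is a minimal sketch in plain Python (no libraries assumed). The helper `apply_matrix` and the diagonal entries 3 and 0.5 are illustrative choices, not taken from the post. The matrix sends each standard basis vector to a scaled copy of itself, and any other vector has each of its coordinates scaled independently.

```python
def apply_matrix(m, v):
    """Multiply matrix m (a list of rows) by the column vector v."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]

# A 2x2 diagonal matrix: scale the x-coordinate by 3 and the y-coordinate by 0.5.
D = [[3.0, 0.0],
     [0.0, 0.5]]

e1 = [1.0, 0.0]  # first standard basis vector
e2 = [0.0, 1.0]  # second standard basis vector
v = [2.0, 4.0]   # an arbitrary vector

print(apply_matrix(D, e1))  # [3.0, 0.0]  (e1 stretched by 3)
print(apply_matrix(D, e2))  # [0.0, 0.5]  (e2 shrunk by half)
print(apply_matrix(D, v))   # [6.0, 2.0]  (each coordinate scaled separately)
```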

-

Every matrix represents a linear transformation. A special kind of matrix, called a diagonal matrix, is very easy to interpret. It should be obvious why we call it a diagonal matrix: its only non-zero entries lie along the main diagonal, and every other entry is zero. It should also not take much to imagine what the linear transformation…
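The defining property (zeros off the main diagonal) can be checked mechanically, and it also explains why diagonal matrices are easy to interpret: multiplying a vector by one reduces to scaling each coordinate separately. This is a minimal sketch in plain Python; `is_diagonal` and `diag_times_vector` are hypothetical helper names, and the matrix is assumed to be a square list of rows.

```python
def is_diagonal(m):
    """Return True if every off-diagonal entry of the square matrix m is zero."""
    n = len(m)
    if any(len(row) != n for row in m):
        raise ValueError("expected a square matrix")
    return all(m[i][j] == 0 for i in range(n) for j in range(n) if i != j)

def diag_times_vector(d, v):
    """Apply the diagonal matrix with diagonal d to v: just elementwise scaling."""
    return [d_i * v_i for d_i, v_i in zip(d, v)]

print(is_diagonal([[2, 0], [0, -1]]))       # True
print(is_diagonal([[2, 1], [0, -1]]))       # False
print(diag_times_vector([2, -1], [5, 3]))   # [10, -3]
```

Note that `is_diagonal` only inspects the off-diagonal entries, so a matrix with some zeros on its diagonal (even the zero matrix) still counts as diagonal.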

-

The loss function is a crucial component of training neural nets. It gives us a measure of how well our neural net is doing. Let’s take a look at the mean squared error loss. Even if you are unfamiliar with the mean squared error loss, it should hopefully be plausible to you that…
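A minimal sketch of the mean squared error in plain Python, assuming the common form $\frac{1}{n}\sum_{i=1}^{n}(\hat{y}_i - y_i)^2$, where $\hat{y}_i$ are the net's outputs and $y_i$ the expected values. Some treatments add a factor of $\frac{1}{2}$, which does not change where the minimum lies. The name `mean_squared_error` is hypothetical.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets."""
    if len(predictions) != len(targets) or not predictions:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)

print(mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # 0.0 (perfect predictions)
print(mean_squared_error([1.0, 2.0], [1.0, 4.0]))            # 2.0 (one miss of size 2)
```

Because the differences are squared, the loss is never negative, is zero only when every prediction matches its target, and grows quickly as predictions drift away.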

-

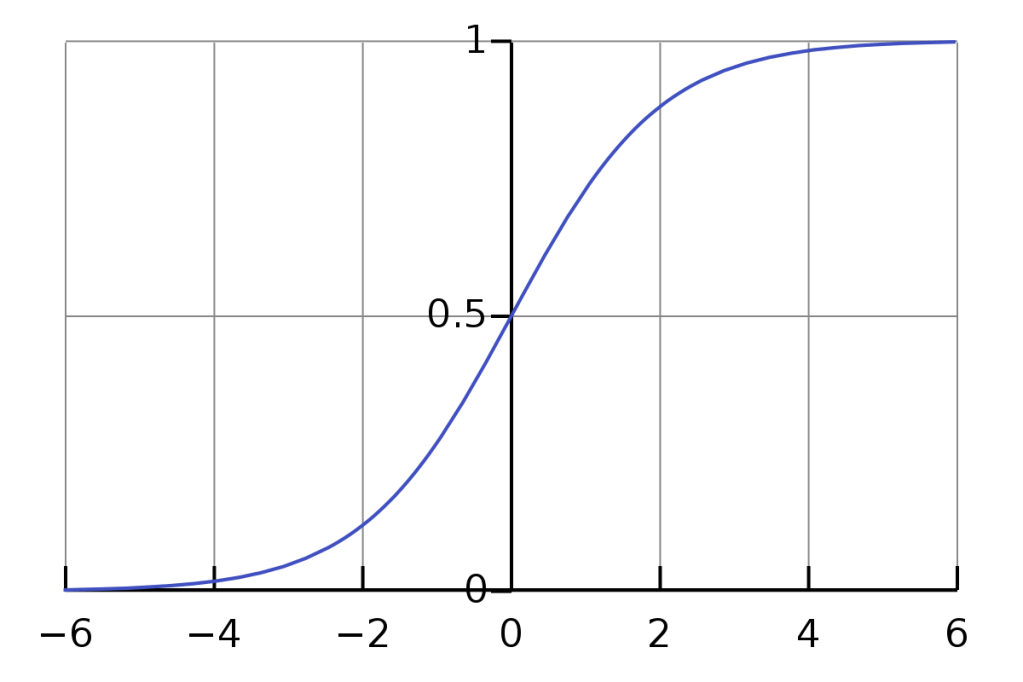

The sigmoid function, $\sigma(x) = \frac{1}{1 + e^{-x}}$, is a popular activation function for neurons in a neural net. Backpropagation uses the derivative of the activation function to compute the gradients of the network’s weights and biases. Here is how to find the derivative of the sigmoid function. Let’s take a look…
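Carrying the differentiation through gives the standard identity $\sigma'(x) = \frac{e^{-x}}{(1+e^{-x})^2} = \sigma(x)\,(1-\sigma(x))$, which is convenient in backpropagation because it reuses the sigmoid value already computed during the forward pass. The sketch below (plain Python; the function names are hypothetical) implements that identity and compares it against a central finite-difference approximation as a sanity check.

```python
import math

def sigmoid(x):
    """Logistic sigmoid 1 / (1 + e^(-x)), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)       # x < 0, so this cannot overflow
    return z / (1.0 + z)  # algebraically equal to 1 / (1 + e^(-x))

def sigmoid_prime(x):
    """Derivative of the sigmoid via sigma'(x) = sigma(x) * (1 - sigma(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)

def numerical_derivative(f, x, h=1e-6):
    """Central finite-difference approximation of f'(x), used only as a check."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

print(sigmoid_prime(0.0))  # 0.25, the derivative's maximum
for x in [-2.0, 0.0, 3.0]:
    # The analytic and numerical derivatives should agree closely.
    print(x, sigmoid_prime(x), numerical_derivative(sigmoid, x))
```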

-

The Loss Function

In order for our neural net to “learn”, we first need some measure of how it is doing. This measure is called the loss function: it quantifies how far the output of our net is from what we expect it to be. There are many kinds of loss functions, but they all…
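To illustrate how different loss functions can measure the same miss differently, here is a small sketch comparing squared error and absolute error on a single output. It is plain Python; both function names are hypothetical, and absolute error is simply one other common choice, not necessarily one the post uses. Both losses are zero when the output matches what we expect and grow as it drifts away, but squared error penalizes large misses more heavily.

```python
def squared_error(output, expected):
    """Grows quadratically with the size of the miss."""
    return (output - expected) ** 2

def absolute_error(output, expected):
    """Grows linearly with the size of the miss."""
    return abs(output - expected)

expected = 1.0
for output in [1.0, 1.5, 3.0]:
    print(output, squared_error(output, expected), absolute_error(output, expected))
# 1.0 0.0 0.0
# 1.5 0.25 0.5
# 3.0 4.0 2.0
```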

-

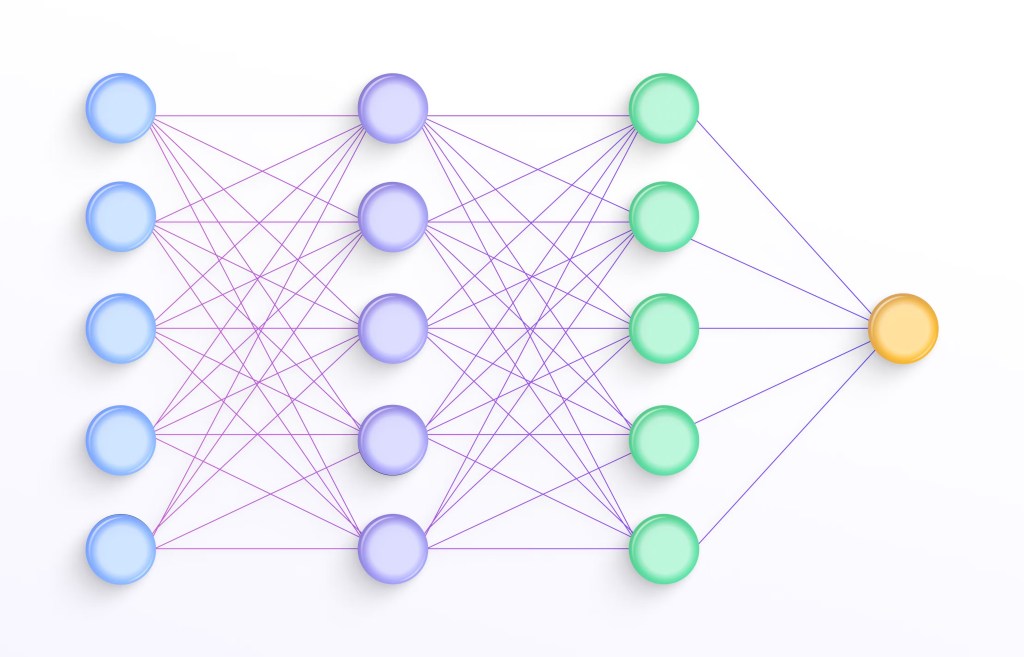

In this post we will begin implementing our neural net. We will start by implementing the basic data structures that make up our neural net. Then I will explain exactly what happens during the forward pass, followed by the code.

The Neural Net Class

First things first: we need a neural net. Each layer in our net…
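Here is a minimal sketch of what such data structures and a forward pass might look like in plain Python. The `Layer` and `NeuralNet` classes, the uniform random weight initialization, the zero biases, and the use of the sigmoid activation in every layer are all assumptions for illustration, not the post's actual code. Each layer computes, for every neuron, a weighted sum of its inputs plus a bias and applies the activation; the forward pass feeds each layer's output into the next.

```python
import math
import random

def sigmoid(x):
    # Numerically stable form of 1 / (1 + e^(-x)).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

class Layer:
    """A fully connected layer: one row of weights and one bias per neuron."""

    def __init__(self, n_inputs, n_neurons, rng):
        self.weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
                        for _ in range(n_neurons)]
        self.biases = [0.0] * n_neurons

    def forward(self, inputs):
        # Each neuron: weighted sum of the inputs plus its bias, through the sigmoid.
        return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
                for row, b in zip(self.weights, self.biases)]

class NeuralNet:
    """A stack of layers; layer_sizes[0] is the number of inputs."""

    def __init__(self, layer_sizes, seed=0):
        rng = random.Random(seed)  # seeded so runs are reproducible
        self.layers = [Layer(n_in, n_out, rng)
                       for n_in, n_out in zip(layer_sizes, layer_sizes[1:])]

    def forward(self, inputs):
        # The forward pass: each layer's output becomes the next layer's input.
        activations = inputs
        for layer in self.layers:
            activations = layer.forward(activations)
        return activations

net = NeuralNet([2, 3, 1])       # 2 inputs, a hidden layer of 3, 1 output
print(net.forward([0.5, -0.2]))  # a list holding one value between 0 and 1
```

Storing weights per layer as one row per neuron keeps the forward pass a direct translation of "weighted sum plus bias, then activation"; a real implementation would more likely use matrix operations from a numerical library.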