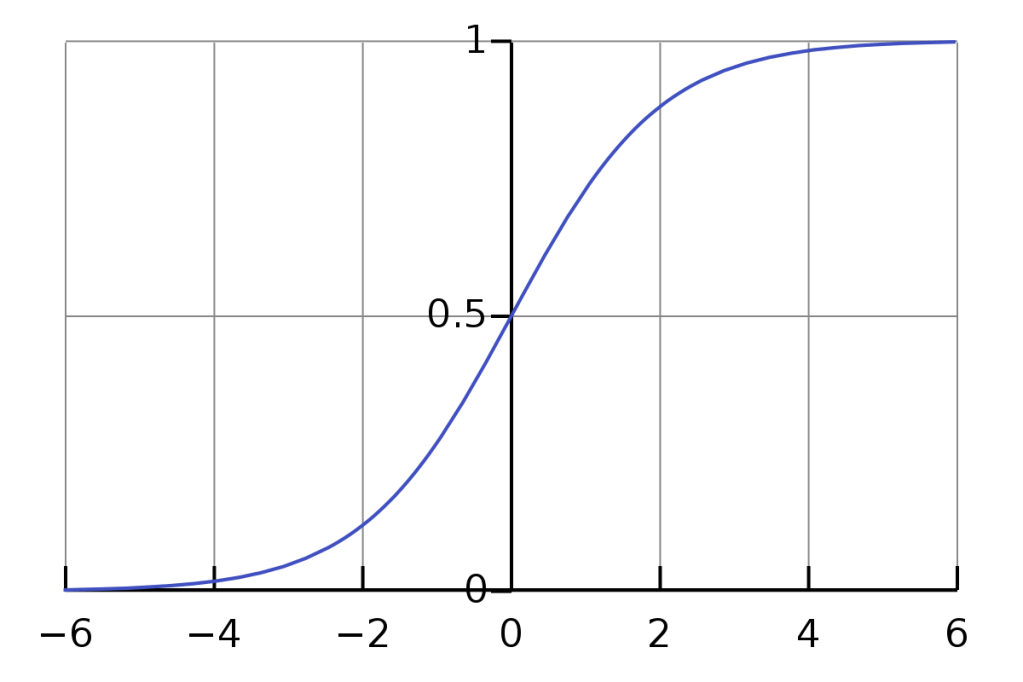

The sigmoid function is a popular activation function for neurons in a neural net.

It is necessary to use the derivative of the activation function when using backpropagation to compute the gradients of the weights and biases of the network.
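To make that concrete, here is a minimal sketch of the gradient computation for a single sigmoid neuron. The squared-error loss, the function name `neuron_gradients`, and the input values are assumptions chosen for illustration. The sketch uses the result that the rest of this post derives: the derivative of the sigmoid is `sigmoid(z) * (1 - sigmoid(z))`.

```python
import math


def sigmoid(z: float) -> float:
    """Sigmoid activation: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron_gradients(w: float, b: float, x: float, y: float) -> tuple[float, float]:
    """Gradients of the loss 0.5 * (a - y)**2, where a = sigmoid(w * x + b).

    The loss and the single-input neuron are illustrative assumptions.
    """
    z = w * x + b
    a = sigmoid(z)
    dloss_da = a - y            # derivative of the loss w.r.t. the activation
    da_dz = a * (1.0 - a)       # derivative of the sigmoid w.r.t. its input
    dloss_dz = dloss_da * da_dz  # chain rule
    return dloss_dz * x, dloss_dz  # (dL/dw, dL/db)


grad_w, grad_b = neuron_gradients(w=0.5, b=0.1, x=2.0, y=1.0)
print(grad_w, grad_b)
```

The `da_dz` line is the only place the activation's derivative appears, which is why a simple closed form for it is so convenient.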

Here is how to find the derivative of the sigmoid function:

Let’s take a look at the equation for the sigmoid function:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

To begin, let’s rewrite this fraction as a negative exponent:

$$\sigma(x) = \left(1 + e^{-x}\right)^{-1}$$

Now we can apply the chain rule:

$$\frac{d}{dx} f(g(x)) = f'(g(x)) \cdot g'(x)$$

The “inner function” is:

$$g(x) = 1 + e^{-x}$$

The “outer function” is:

$$f(u) = u^{-1}, \quad \text{where } u = 1 + e^{-x}$$

So, first we find the derivative of the “inner function”:

$$\frac{d}{dx}\left(1 + e^{-x}\right) = -e^{-x}$$

Now we find the derivative of the “outer function” as if the “inner function” were just a variable $u$:

$$\frac{d}{du}\, u^{-1} = -u^{-2}$$

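Now, as per the chain rule, we multiply them together, putting the “inner function” back in place of the variable:

$$\sigma'(x) = -\left(1 + e^{-x}\right)^{-2} \cdot \left(-e^{-x}\right) = e^{-x}\left(1 + e^{-x}\right)^{-2}$$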

Let’s convert our negative exponent back to a fraction:

$$\sigma'(x) = \frac{e^{-x}}{\left(1 + e^{-x}\right)^{2}}$$

Let’s factor this into two pieces:

$$\sigma'(x) = \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}}$$

We can rewrite the numerator of the second part to look like this:

$$\sigma'(x) = \frac{1}{1 + e^{-x}} \cdot \frac{1 + e^{-x} - 1}{1 + e^{-x}}$$

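All we did was add 1 to the numerator and then subtract 1. This does not change the value at all, but it allows us to split the second part like this:

$$\sigma'(x) = \frac{1}{1 + e^{-x}} \cdot \left(\frac{1 + e^{-x}}{1 + e^{-x}} - \frac{1}{1 + e^{-x}}\right)$$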

Simplifying further gives us:

$$\sigma'(x) = \frac{1}{1 + e^{-x}} \cdot \left(1 - \frac{1}{1 + e^{-x}}\right)$$

We know that $\sigma(x) = \frac{1}{1 + e^{-x}}$, so we can substitute it into our equation, which finally gives us:

$$\sigma'(x) = \sigma(x)\left(1 - \sigma(x)\right)$$
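As a quick sanity check, the closed form $\sigma(x)(1 - \sigma(x))$ can be compared against a numerical derivative. The sketch below is only an illustration: the helper names `sigmoid_derivative` and `central_difference`, the step size `h`, and the sample points are all assumptions, not part of the derivation.

```python
import math


def sigmoid(x: float) -> float:
    """Sigmoid function: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x: float) -> float:
    """Closed-form derivative: sigmoid(x) * (1 - sigmoid(x))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def central_difference(f, x: float, h: float = 1e-5) -> float:
    """Numerical derivative of f at x using a symmetric difference."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


# Compare the two at a few sample points (chosen arbitrarily).
for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
    exact = sigmoid_derivative(x)
    approx = central_difference(sigmoid, x)
    print(f"x={x:+.1f}  closed form={exact:.8f}  finite difference={approx:.8f}")
```

The two columns should agree to many decimal places. At $x = 0$ the sigmoid equals 0.5, so the derivative is $0.5 \cdot 0.5 = 0.25$, which is its largest value.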
